Add / Remove script into / from script set directly in GF script info page

Feedback on Greasyfork script-set-edit button

You could add an endpoint for each user that returns a .json list of all their sets, with the link/ID of each script in each set. Or, if that is too hard and time-consuming, there is another option that wouldn't require anything from you: this script's dev could fetch all sets once and store the ID/link of every script in each set. Then, if that set/link is opened again, there is no need to fetch the set again, since the already stored data can be used. If the user removes a script from a set while on the script page or the set page, the script just has to track that change. This way the script would make only "1" fetch request after the user installs it.

I've tried to resolve it, but failed. On one hand, there is no API for managing Greasy Fork's script sets; the only way to view and modify script set data is to request the webpage and simulate a form submission on the script set page. On the other hand, users can and may edit script set content in a context where this userscript isn't running (for example, in another browser or on a device that doesn't have this userscript installed), so tracking modifications to script sets is not possible (or at least not accurately). Because of these issues, I made this script automatically sync script sets and their contents each time the userscript is loaded, which results in a large number of requests.

It has indeed caused some users to get 503 errors, and I've tried to find a way to reduce web requests, but I couldn't. I don't know how to reduce the number of requests without resolving the two issues above.

If Greasy Fork could provide an API to manage script sets, it might be possible to bundle several requests into one and eliminate many of them. The API can be simple; as @hacker09 said, a .json file that contains all script sets and their data would be useful. In that case, I could reduce requests by roughly N/(N+1) if the user has N sets in their profile (going from 1 request for the sets list plus N requests for the sets' contents to 1 request for the .json file).
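As a worked check of that estimate: a sync currently costs one request for the sets list plus one per set, and a single .json endpoint would replace all of them. The N = 10 line is only an illustrative value.

```latex
\begin{aligned}
\text{requests before} &= 1 + N \quad \text{(sets list + one request per set)}\\
\text{requests after} &= 1 \quad \text{(single .json file)}\\
\text{fraction saved} &= \frac{(N + 1) - 1}{N + 1} = \frac{N}{N + 1}\\
N = 10:\quad \frac{10}{11} &\approx 91\%
\end{aligned}
```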

In that case, add a manual button, or a delay of a few days, so that the whole batch of network requests runs automatically only, say, once a week.

That way you can also pick up changes made in other browsers, etc.

Every page load is too often, as I often open 30+ script pages, so a delay of at least an hour should obviously be applied, even though some things may then be inaccurate for up to an hour.
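The time-based throttle suggested above can be sketched as a single check run on each page load. This is a minimal sketch under stated assumptions: `SyncState`, `shouldSync` and `HOUR_MS` are hypothetical names, the timestamp would in practice be persisted somewhere such as GM values, and the `force` flag stands in for a manual refresh button.

```typescript
// Hypothetical cache record; field names are illustrative, not the script's real storage format.
interface SyncState {
  lastSyncMs: number | null; // epoch ms of the last full sync, null if never synced
}

const HOUR_MS = 60 * 60 * 1000;

// Decide whether this page load should run a full (request-heavy) sync.
// `force` models a manual "refresh" button and always syncs; otherwise a sync
// runs only if none has happened yet or the last one is at least minIntervalMs old.
function shouldSync(
  state: SyncState,
  nowMs: number,
  minIntervalMs: number = HOUR_MS,
  force: boolean = false,
): boolean {
  if (force) return true;
  if (state.lastSyncMs === null) return true;
  return nowMs - state.lastSyncMs >= minIntervalMs;
}
```

With a one-hour interval, opening 30 script pages within the same hour costs one sync instead of 30; passing `7 * 24 * HOUR_MS` as `minIntervalMs` gives the once-a-week variant.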

Another option is to do a batch of requests when the script is installed, and after that only when Remove/Add is actually clicked, so there's absolutely no need to make so many requests on each page load.

My last sentence also assumes that the user won't use another browser just to manually add/remove a script from a set.
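Updating the cache only when Add/Remove is clicked can look roughly like the sketch below. It assumes a hypothetical in-memory cache mapping set IDs to the IDs of scripts directly added to each set; `SetCache`, `applyLocalEdit` and `setsContaining` are illustrative names, and persisting the cache is left out.

```typescript
// Hypothetical cached shape: set ID -> IDs of the scripts directly added to that set.
type SetCache = Map<number, Set<number>>;

// Record an add/remove that this userscript itself just performed, so the cache
// stays correct without re-fetching every set. Edits made elsewhere (another
// browser or device) are not seen here and still need a full sync to pick up.
function applyLocalEdit(
  cache: SetCache,
  setId: number,
  scriptId: number,
  action: "add" | "remove",
): void {
  const scripts = cache.get(setId);
  if (action === "add") {
    if (scripts === undefined) cache.set(setId, new Set([scriptId]));
    else scripts.add(scriptId);
  } else if (scripts !== undefined) {
    scripts.delete(scriptId);
  }
}

// IDs of cached sets that contain the script, e.g. to decide where to show "[✔]".
function setsContaining(cache: SetCache, scriptId: number): number[] {
  const result: number[] = [];
  cache.forEach((scripts, setId) => {
    if (scripts.has(scriptId)) result.push(setId);
  });
  return result;
}
```

The comment on `applyLocalEdit` marks the limitation raised in this thread: the cache only knows about edits made through this userscript.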

If I added an API to get the list of scripts directly added to a set (as opposed to those added as part of a calculation), would that be helpful?

Thanks for your reply, but I don't think that would particularly help reduce the number of requests.

To make it clear, this userscript gets the script sets' data in the following way:

For a user with N sets, the userscript:

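1. Requests the user page and reads the list of sets from `#user-script-sets` (1 request).
2. Requests each set's edit page and reads its scripts from `#script-set-scripts>input[name="scripts-included[]"]` (N requests). This step aims to get the content of the sets, so we can know whether a script is in a set and display a "[✔]" if it is.

That is N + 1 requests each time we do a sync.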
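To make the request count concrete, here is a sketch of that sync: one request for the sets list, then one request per set. The fetchers are hypothetical stand-ins injected as parameters (the real script parses Greasy Fork pages), so only the request-counting logic is shown.

```typescript
// Hypothetical stand-ins for the real page requests:
// fetchSetList    loads the user page and reads the list of sets (1 request)
// fetchSetScripts loads one set's edit page and reads its scripts (1 request per set)
interface SetSummary {
  id: number;
  name: string;
}
type FetchSetList = () => Promise<SetSummary[]>;
type FetchSetScripts = (setId: number) => Promise<number[]>;

interface SyncResult {
  sets: Map<number, number[]>; // set ID -> script IDs
  requests: number; // page requests made by this sync
}

async function syncAllSets(
  fetchSetList: FetchSetList,
  fetchSetScripts: FetchSetScripts,
): Promise<SyncResult> {
  const list = await fetchSetList(); // the sets list: 1 request
  let requests = 1;
  const sets = new Map<number, number[]>();
  for (const set of list) {
    // one request per set, so N requests for N sets
    sets.set(set.id, await fetchSetScripts(set.id));
    requests += 1;
  }
  return { sets, requests };
}
```

With 10 sets this reports 11 requests, the same "10 requests + the base request" per navigation described later in this thread when nothing is cached.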

You can see that most requests are made in step 2, where we have to make N requests just to figure out whether a script is in the user's sets, and which set(s) it is in.

To reduce the number of requests, an API would help if it could return the list of sets that a given script is included in. In that case, I could reduce step 2 from N requests to 1. Alternatively, a .json file of all sets with their contents would still help.
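With a single "all sets with their content" response, finding the sets that contain a given script becomes a local lookup instead of N requests. The sketch assumes a hypothetical JSON shape (`id`, `name`, and `scripts` as a list of script IDs); the actual response format is not described in this thread.

```typescript
// Hypothetical shape of an "all sets with their content" response; field names
// are assumptions for illustration, not Greasy Fork's actual format.
interface SetEntry {
  id: number;
  name: string;
  scripts: number[]; // script IDs in this set
}

// Invert the data into script ID -> IDs of the sets containing it, so a script
// page can answer "which of my sets contain this script?" with one lookup.
function indexByScript(sets: SetEntry[]): Map<number, number[]> {
  const index = new Map<number, number[]>();
  for (const set of sets) {
    for (const scriptId of set.scripts) {
      const setIds = index.get(scriptId);
      if (setIds === undefined) {
        index.set(scriptId, [set.id]);
      } else if (setIds.indexOf(set.id) === -1) {
        setIds.push(set.id); // skip duplicates if a script is listed twice in one set
      }
    }
  }
  return index;
}
```

The index would be built once per sync and cached, so each script page only performs `index.get(scriptId)`.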

Thanks again for your help!

I'm sorry, I made a mistake here:

...this step aims to get the content of sets (whether scripts were added directly to the set or as part of a calculation), so we can know whether a script is in this set, and display a "[✔]" if it is

Actually it should be:

...this step aims to get the scripts directly added to sets, so we can know whether a script is in this set, and display a "[✔]" if it is

So, after this correction: if there were an API to get the list of scripts directly added to a set (as opposed to those added as part of a calculation), I could replace the requests to set edit pages with requests to that API, but that wouldn't decrease the number of requests.

So the conclusion is unchanged. To reduce the number of requests, a different kind of API is needed:

To reduce the number of requests, an API would help if it could return the list of sets that a given script is included in. In that case, I could reduce step 2 from N requests to 1. Alternatively, a .json file of all sets with their contents would still help.

Why don't you cache the information in sessionStorage?

You don't need to query all script sets on every browse action.

You can just query once (with a sufficient delay before moving on to the next network request).

Then store them in either GM values or sessionStorage.
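The "query once, with a delay between requests" part can be sketched as a sequential loop that waits between consecutive requests instead of firing them in a burst. `fetchSequentially`, `fetchOne` and the injected `sleep` are hypothetical names; saving the results to GM values or sessionStorage is not shown.

```typescript
// Hypothetical helpers: `sleep` and `fetchOne` are injected so the pacing logic
// can be tested; in a userscript fetchOne would request one set page and the
// results would then be saved to GM values or sessionStorage.
type Sleep = (ms: number) => Promise<void>;
const realSleep: Sleep = (ms) => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Fetch items one at a time, waiting delayMs between consecutive requests,
// instead of sending them all at once.
async function fetchSequentially<T, R>(
  items: T[],
  fetchOne: (item: T) => Promise<R>,
  delayMs: number,
  sleep: Sleep = realSleep,
): Promise<R[]> {
  const results: R[] = [];
  for (let i = 0; i < items.length; i++) {
    if (i > 0) await sleep(delayMs); // no wait before the first request
    results.push(await fetchOne(items[i]));
  }
  return results;
}
```

A sequential loop trades sync speed for a bounded request rate; with a 500 ms delay, for example, the rate stays at or below about two requests per second regardless of how many sets the user has.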

If the editing is done by your script, your script itself knows what changed and can update the corresponding entry.

If the editing happens outside your script's control, you can add a manual refresh button and refresh the script sets when it is pressed.

Right now, if a user has created 10 script sets, your script makes 10 requests plus the base request on every page navigation in Greasy Fork.

In case the English is hard to understand, I also posted the same explanation in Chinese.

The "base request" is the fetching of user page to get script sets, right?

This is also unnecessary. As above said, you can just do caching and manual refresh. You don't need to query the user page in every navigation.

@ 𝖢𝖸 𝖥𝗎𝗇𝗀 Thanks for the suggestion; I'll consider caching data with a manual refresh. However, as discussed above, if JasonBarnabe can provide a suitable new API, I'd prefer to use it rather than caching, since it would reduce network requests while keeping the data fully accurate. If the new API is confirmed, I'll switch to it and reduce both the number and the frequency of requests; if there won't be a new API in the near future, I'll implement the caching strategy to reduce network requests.

There is now an API at https://greasyfork.org/en/users/1-jasonbarnabe/sets (only accessible to the user themselves) that lists all the user's sets and the scripts within.

I would still recommend implementing caching, because this request can be heavy on the server depending on the number of sets, how many scripts they include, and how those scripts are included.

I've just tried the new API at https://greasyfork.org/zh-CN/users/667968-pyudng/sets, https://greasyfork.org/en/users/667968-pyudng/sets, https://greasyfork.org/en/users/667968-pyudng/sets/?locale_override=1, and https://greasyfork.org/users/667968-pyudng/sets, but all of them returned an HTTP ERROR 500. Is something wrong?

I've just updated the script to include caching; it should now auto-sync at most once a day. Please send feedback if something isn't working well, including bugs, auto-sync frequency adjustments, or anything else.

Besides, I know caching alone can't solve every problem. For users who have a really large number of sets (like 8-decembre), syncing still makes many requests in a short time. I'm still looking forward to the new API.

There was an error for sets that included or excluded other sets. I've made an adjustment - try it now.

Thanks to @JasonBarnabe for the API support. I've just updated the script to use this API, and it still provides the old data-fetching method as a fallback (turn off the 'Use GF API' switch in Tampermonkey's menu commands to use the fallback). The caching strategy still applies, so auto-syncing via the API should happen only once per day. I'd like to hear whether everything works fine, especially whether the 503 errors are solved. Thanks again to all of you!
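A rough sketch of choosing between the API and the older method follows. The post describes a manual 'Use GF API' switch; automatically falling back when the API request throws is an extra assumption of this sketch, and `loadViaApi` / `loadViaPages` are hypothetical stand-ins for the API request and the page-by-page method.

```typescript
// Hypothetical loaders: loadViaApi stands for one request to the user's /sets
// endpoint, loadViaPages for the older page-by-page method.
type SetsData = Map<number, number[]>; // set ID -> script IDs
type Loader = () => Promise<SetsData>;

interface LoadResult {
  data: SetsData;
  source: "api" | "pages";
}

// Use the API when the "Use GF API" option is on. As an extra assumption, if the
// API request fails (for example with an HTTP 500), fall back to the old method.
async function loadSets(
  useApi: boolean,
  loadViaApi: Loader,
  loadViaPages: Loader,
): Promise<LoadResult> {
  if (useApi) {
    try {
      return { data: await loadViaApi(), source: "api" };
    } catch {
      // fall through to the page-based method
    }
  }
  return { data: await loadViaPages(), source: "pages" };
}
```

Whichever path succeeds, the result would still go through the once-a-day cache, so the fallback does not bring back per-page-load requests.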

Thanks to both of you for this latest update:

It seems to work great now!

@decembre Thanks for your active feedback and debug info over this period; the problem was too difficult for me to solve by myself. I've always felt sorry when I thought about how you even deleted some of your own sets trying to solve the problem while I could do nothing to help. But thanks to JasonBarnabe's help and your support, we finally made it. You're awesome!

:-)

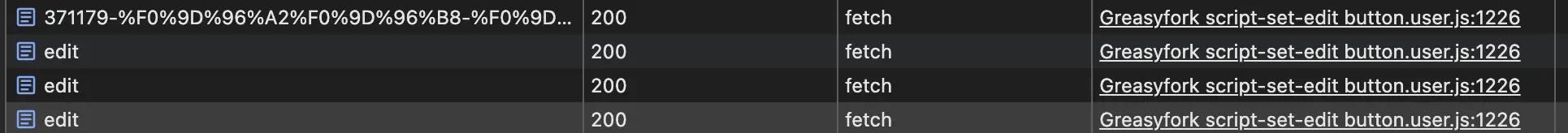

This script causes users to get 503 errors due to excessive requests to the script set edit page. I've seen it in the logs causing upwards of 50 requests per second.

Can this be adjusted to.... not do that? Is there an alternative API that I could implement that you could use instead?